The digital world is increasingly grappling with the issue of AI-generated fakes. The misuse of generative artificial intelligence tools has led to a surge in deceptive images, videos, and audio content online.

The prevalence of AI deepfakes, featuring figures ranging from Taylor Swift to Donald Trump, is making it increasingly challenging to distinguish between real and fabricated content. Tools like DALL-E, Midjourney, and OpenAI’s Sora have simplified the creation of deepfakes for individuals lacking technical expertise – a simple command is all it takes.

While these counterfeit images may appear innocuous, they can be exploited for scams, identity theft, propaganda, and even election manipulation.

Detecting a Deepfake

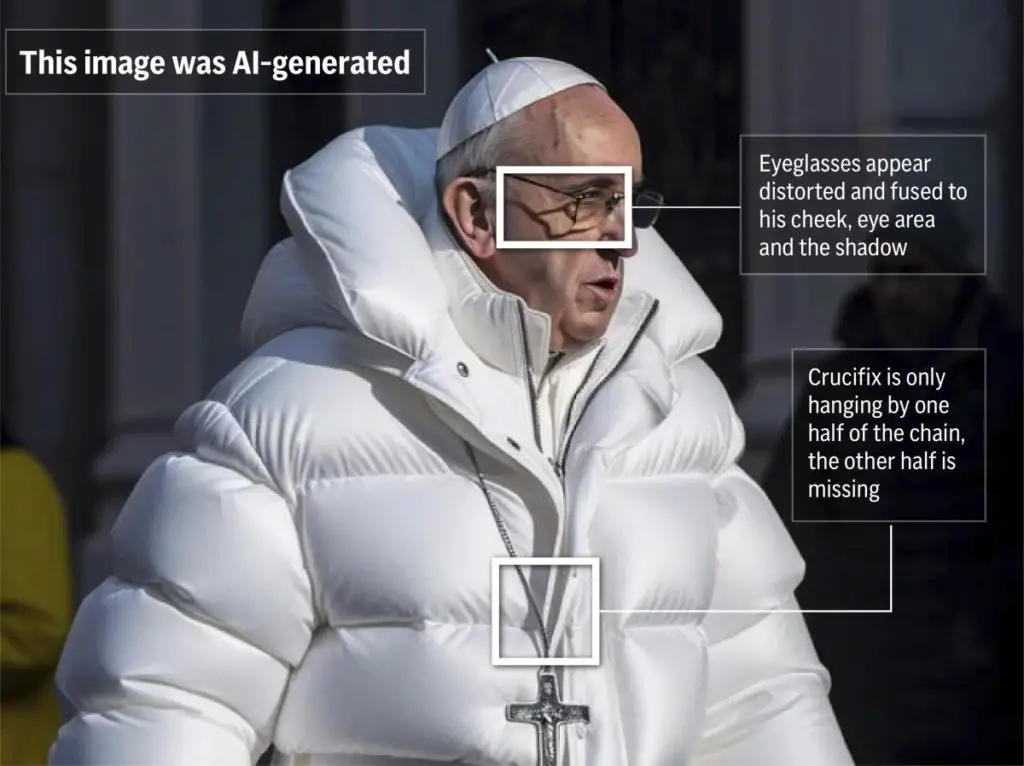

In the early days of deepfakes, the technology was imperfect and often left clear signs of tampering. Fact-checkers have highlighted images with glaring mistakes, such as hands with six fingers or eyeglasses with mismatched lenses.

However, as AI has advanced, detection has become more difficult. Some previously reliable tips, like watching for unnatural blinking patterns in deepfake videos, are no longer valid, according to Henry Ajder, the founder of consulting firm Latent Space Advisory and a renowned expert in generative AI.

Nevertheless, Ajder suggests a few indicators to watch out for.

Many AI deepfake photos, particularly of people, exhibit an electronic gloss, a kind of “aesthetic smoothing effect” that results in skin that appears “exceptionally polished,” according to Ajder. He cautions, though, that creative prompting can sometimes eliminate this and many other signs of AI manipulation.

Examine the consistency of shadows and lighting. Often, the subject is in sharp focus and appears convincingly real, but elements in the background may not be as realistic or refined.

Examining the Faces

Face-swapping is a common method used in deepfakes. Experts recommend scrutinizing the edges of the face. Does the facial skin tone match the rest of the head or body? Are the edges of the face sharp or blurry?

If you suspect a video of a person speaking has been altered, observe their mouth. Do their lip movements align perfectly with the audio? Ajder suggests examining the teeth. Are they clear, or are they blurry and inconsistent with how they appear in reality?

Cybersecurity firm Norton states that algorithms may not yet be advanced enough to generate individual teeth, so the absence of outlines for individual teeth could be a clue.

Considering the Context

Sometimes, the context is important. Pause to consider whether what you’re seeing is believable.

The Poynter journalism website suggests that if you see a public figure doing something that seems “overblown, unrealistic, or out of character,” it could be a deepfake.

For instance, would the Pope really be wearing a luxury puffer jacket, as depicted in a notorious fake photo? If he were, wouldn’t there be additional photos or videos published by credible sources?

Using AI to Detect Fakes

Another strategy is to use AI to combat AI. Microsoft has created an authenticator tool that can analyze photos or videos and provide a confidence score on whether they have been manipulated. Chipmaker Intel’s FakeCatcher uses algorithms to analyze an image’s pixels to determine if it’s real or fake.

There are online tools that claim to detect fakes if you upload a file or paste a link to the suspicious material. However, some, like Microsoft’s authenticator, are only available to selected partners and not the public. This is because researchers don’t want to give bad actors an advantage in the deepfake arms race.

Open access to detection tools could also give people the impression they are “godlike technologies that can outsource the critical thinking for us” when instead we need to be aware of their limitations, Ajder said.
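One way to keep those limitations in view is to treat a detector's confidence score as a prompt for further checking rather than a verdict. The short Python sketch below is purely illustrative: the `triage` function, the 0-to-1 score scale, and the 0.2 and 0.8 cutoffs are assumptions made for this example, and it does not describe how Microsoft's or Intel's tools actually work.

```python
def triage(score: float, low: float = 0.2, high: float = 0.8) -> str:
    """Turn a hypothetical manipulation-confidence score into a cautious label.

    score: assumed to be a number from 0 (no sign of manipulation)
           to 1 (strong sign of manipulation).
    low, high: illustrative cutoffs, not values taken from any real tool.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if not 0.0 <= low < high <= 1.0:
        raise ValueError("cutoffs must satisfy 0 <= low < high <= 1")

    if score >= high:
        return "likely manipulated"
    if score <= low:
        return "likely authentic"
    # Anything in the middle is left open for a person to investigate.
    return "inconclusive"


if __name__ == "__main__":
    for s in (0.05, 0.5, 0.93):
        print(f"{s:.2f} -> {triage(s)}")
```

The labels are deliberately worded as "likely" rather than "real" or "fake", and the wide middle band sends uncertain cases back to a human, who can then check the context and look for coverage from credible sources.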

Challenges in Detecting Fakes

With all this in mind, it’s important to remember that artificial intelligence is advancing at an unprecedented pace, and AI models are being trained on internet data to produce increasingly higher-quality content with fewer flaws.

This means there’s no guarantee this advice will still be valid even a year from now. Experts warn that it might even be risky to expect ordinary people to become digital detectives because it could give them a false sense of confidence as it becomes increasingly difficult, even for trained eyes, to spot deepfakes.

This is a fascinating article, but some aspects feel a little outdated. Don’t get me wrong, the whole “spotting deepfakes by looking for blurry teeth” thing is good to know, but what about more sophisticated techniques? We need future-proofed solutions, not yesterday’s tricks.

This is a scary thought – AI-generated deepfakes getting so good that even experts struggle to tell them apart. Just look at the “Pope in a puffer jacket” thing. Crazy how something so obviously fake could almost trick you. Gotta be super vigilant these days…

Maybe there should be some kind of digital watermarking system for online content. Something that verifies the source and authenticity of an image or video. Like a tamper-proof seal in the digital age.

Maybe the answer lies in a multi-pronged approach. A combination of advanced AI detection, digital watermarks for verified content origin, and yes, even some good old-fashioned critical thinking skills. Tech companies need to develop better detection algorithms that stay ahead of the curve, not tools locked away behind paywalls or limited access.

This deepfake stuff is creepy… Like, what’s even real anymore on the internet? Feels like we’re living in a simulation where nothing is what it seems. Maybe we should all just switch to VR and call it a day…

I think maybe the best defense is a healthy dose of skepticism. If something seems too outlandish to be true online, it probably is.